We have written about our Augmented Reality projects before, here, here, and here. But we have never talked about one of the original case studies that motivated us to start working with AR in 2018: visualizing the process of reconstructing a human face from the fragments of an excavated skull of a Russian soldier who died in the Battle of Castricum in 1799. This was an unfunded side project, an experimental case meant to get to grips with AR technology, which is why it had been lying around nearly finished for over a year. We finally got around to making an improved version, thanks to the lessons learned in the Blended Learning projects. In this post I’d like to discuss the project background and the process of creation.

Battle of Castricum, 1799

In 1799 a war was fought in Holland that we don’t learn about in Dutch history class, which is why it is referred to as ‘the forgotten war’. The Dutch were under French rule, and their joint armies clashed with those of Great Britain and the Russian Empire in the dunes near Castricum. Casualties were high, and many soldiers died in these dunes. They were buried there in simple graves, wearing their uniforms. Occasionally, a grave is found by accident and excavated. The nationality of the soldier can usually be derived from the buttons, the only surviving parts of the uniform.

Visualizing archaeological interpretation

The reconstruction of a face from an excavated skull is an intricate process that combines forensics, archaeology, and anatomy with the art of sculpting. With so many disciplines involved, some of them rare in their own right, it is not surprising that few people have this skill. Nevertheless, it is an extremely important aspect of our study of the past, as it gives a face to people who lived many years ago in societies we know only from their material remains. One of the people with this expertise is Maja d’Hollosy, who works at ACASA as a physical anthropologist and is also a freelance facial reconstruction artist. Her work has been featured in many archaeological exhibitions in the Netherlands and even on national television. The popularity of these reconstructions is easy to understand: there is something magical about looking into the eyes of a person who lived thousands of years ago, modelled so realistically that they are hard to distinguish from a real person.

But these kinds of reconstructions are often met with questions from the public: how do you know what a face looked like just by studying the skull? Would this person really have looked like this? Isn’t this very speculative? These are valid questions, which in fact pertain to all archaeological interpretation: how can we be so sure? As we often can’t, the least we can do is be honest about our methods and assumptions. The physical anthropological method of facial reconstruction is certainly not a complete gamble. Human facial features strongly correlate with the underlying bone structure, and facial reconstruction is largely a matter of applying statistical data on muscle and skin thickness. Skin colour and facial hair, on the other hand, cannot be read from the bones.

Still, this part of our work, the art of reconstruction and interpretation, often remains underexposed in public outreach. The usual excuse is that ‘the public’ isn’t interested in learning how we got there; they just want the final picture. We don’t think this is true, at least not for all of the public. Loeka Meerts, an archaeology student at Saxion University of Applied Sciences, studied the possibilities of using AR to present archaeological facial reconstructions and found that over half of the respondents (n = 42) were interested in learning more about how these facial reconstructions are made.

This is where we believe Augmented Reality can play a role. AR offers a way to enrich reality by superimposing a layer of additional visual information on it. So why not use it to visualise the process of interpretation on top of a target object, the reconstructed face?

The idea

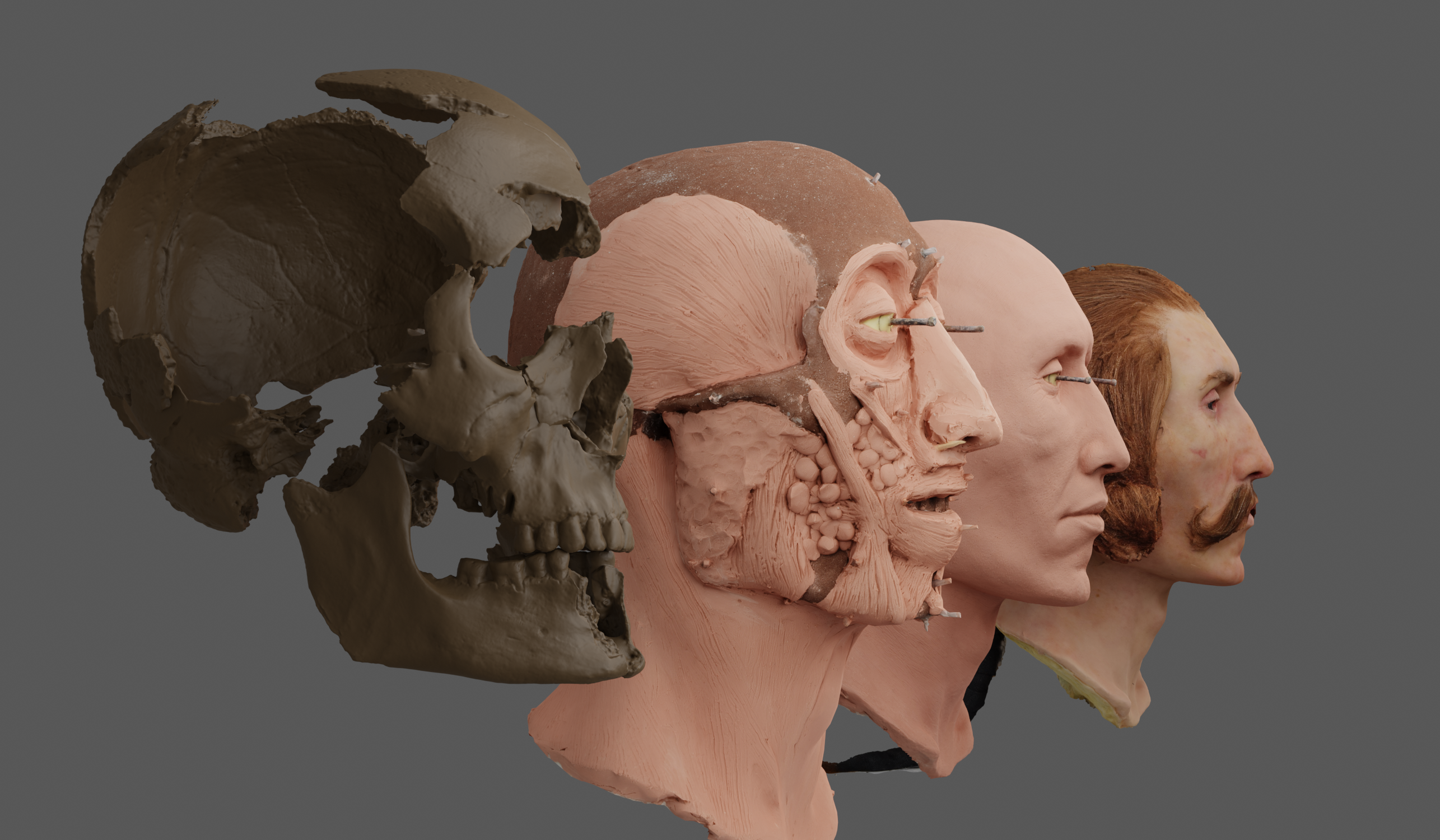

The aim of the AR app is to visualise the steps Maja took to reconstruct the face, from the archaeological remains to the full reconstruction including skin colour and hair. The basic mechanism is very simple: a user points a mobile device at a target, a 3D printed version of the reconstructed skull, and the original fragments of the incomplete skull appear on top of it. From then on, the user can swipe through the process of facial reconstruction, and can walk around the reconstructions (digital 3D models of Maja’s work) to view them from all sides. The videos on the side demonstrate the app.
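The swipe interaction can be pictured as a simple index over an ordered list of models. The sketch below is hypothetical and not the app’s actual Unity code: the `StepViewer` class, the step labels, and the choice to stop at the first and last step instead of wrapping around are assumptions for illustration. The order follows the original fragments plus the six reconstruction steps described in the next section.

```python
# Hypothetical sketch of the swipe navigation; not the app's actual code.
STEPS = [
    "original fragments",
    "complete skull",
    "tissue thickness pins",
    "muscle tissue",
    "skin",
    "skin colour",
    "(facial) hair",
]

class StepViewer:
    """Tracks which reconstruction model is currently shown on top of the target."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.index = 0  # assumption: recognising the target first shows the fragments

    @property
    def current(self):
        return self.steps[self.index]

    def swipe(self, direction):
        """direction is +1 (next step) or -1 (previous step); clamps at both ends."""
        self.index = max(0, min(len(self.steps) - 1, self.index + direction))
        return self.current

viewer = StepViewer(STEPS)
print(viewer.current)    # original fragments
print(viewer.swipe(+1))  # complete skull
print(viewer.swipe(-1))  # original fragments
print(viewer.swipe(-1))  # original fragments (already at the first step)
```

Clamping keeps the user from jumping from the finished face straight back to the bare fragments; wrapping around would be an equally valid design choice.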

3D scanning and photogrammetry

To make this possible, we needed 3D models of each step in the reconstruction. The original bone fragments had already been scanned with a high-resolution 3D scanner (the HDI Advance R3x). Maja needed this scan for a 3D print of the fragments, which she used as the foundation for the sculpting process. Next, we chose six essential steps in the facial reconstruction process:

- the reconstruction of the fragments into a complete skull

- the placement of tissue thickness pins

- the modelling of muscle tissue

- the application of skin

- the colouring of the skin

- the application of (facial) hair

Each of these steps was recorded using photogrammetry. About 140 photos were taken in three circles around the subject; this number of photos gives good-quality, high-resolution 3D models with no occluded parts. The photos were processed in Agisoft Metashape. The sculpts are easy photogrammetry subjects, as they have a lot of detail that the software can use to match photos, and hardly any shiny or transparent parts. The results were generally very good, although some of the transparent tissue thickness pins of step 2 caused problems. Hair is also a notoriously difficult material to reconstruct photogrammetrically, so the last step did not come out as neatly. Such defects can be fixed by manually editing the 3D models and textures in 3D modelling software, but this is quite time-consuming, especially for the hair, so we left that for another moment.
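To picture the capture set-up, the sketch below spreads roughly 140 camera positions evenly over three horizontal circles around the subject. The radius (0.6 m) and the three elevation angles are hypothetical values chosen for illustration, not the settings used in this project. With 140 photos over three rings, neighbouring shots within a ring are only about 8 degrees apart, which gives the kind of heavy overlap photogrammetry software relies on to match photos.

```python
import math

def ring_positions(total_photos=140, elevations_deg=(-20.0, 10.0, 40.0), radius_m=0.6):
    """Spread total_photos over one horizontal circle per elevation angle.

    Returns (x, y, z) camera positions in metres, with the subject at the origin and z up.
    The default elevations and radius are hypothetical.
    """
    rings = len(elevations_deg)
    base, extra = divmod(total_photos, rings)
    positions = []
    for i, elev in enumerate(elevations_deg):
        n = base + (1 if i < extra else 0)  # hand the remainder to the first rings
        e = math.radians(elev)
        for k in range(n):
            a = 2 * math.pi * k / n  # evenly spaced azimuths around the circle
            positions.append((
                radius_m * math.cos(e) * math.cos(a),
                radius_m * math.cos(e) * math.sin(a),
                radius_m * math.sin(e),
            ))
    return positions

cams = ring_positions()
print(len(cams))  # 140 (rings of 47, 47 and 46 photos)
```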

The photogrammetric reconstruction results in very high-resolution models, which need to be simplified for display in an app that should run on a phone. Each scan was therefore decimated to 50,000 faces. That is still a sizeable number, but it is manageable for most devices. Although you lose geometric detail, this is hardly noticeable, as the generated photo-textures bring back the visual detail. Besides the photo-textures, normal maps and ambient occlusion maps were also generated from the high-resolution models. These create the illusion of small-scale depth, such as the bumps and pores of the skin that were lost in the decimation process.
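How a normal map brings back small-scale depth can be shown with a few lines of arithmetic. A tangent-space normal map stores, per texel, a unit surface normal whose components in [-1, 1] are remapped to 8-bit RGB; at render time the decoded normal, not the flat low-poly geometry, drives the lighting. The sketch below assumes simple Lambertian (diffuse) shading, a hypothetical light direction, and a hypothetical 20-degree bump; a game engine does the same per pixel on the GPU.

```python
import math

def encode_normal(n):
    """Map a unit normal (components in [-1, 1]) to 8-bit RGB, as tangent-space normal maps do."""
    return tuple(int((c * 0.5 + 0.5) * 255 + 0.5) for c in n)

def decode_normal(rgb):
    """Map 8-bit RGB back to a unit vector, re-normalised to undo quantisation error."""
    v = [c / 255 * 2 - 1 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light):
    """Diffuse brightness: cosine of the angle between normal and light, clamped at 0."""
    return max(0.0, sum(a * b for a, b in zip(normal, light)))

# Hypothetical light direction, 45 degrees from the surface normal, in tangent space.
light = (math.sin(math.radians(45)), 0.0, math.cos(math.radians(45)))

flat = (0.0, 0.0, 1.0)   # texel where the detail matches the decimated surface
tilt = math.radians(20)  # hypothetical flank of a skin bump, tilted 20 degrees
bump = (math.sin(tilt), 0.0, math.cos(tilt))

for name, n in (("flat", flat), ("bump", bump)):
    rgb = encode_normal(n)
    shade = lambert(decode_normal(rgb), light)
    print(name, rgb, round(shade, 3))
```

The flat normal encodes to (128, 128, 255), the familiar light blue of normal-map textures. The bump texel comes out brighter than the flat one (roughly 0.91 versus 0.71) even though both lie on the same flat polygon, which is exactly the illusion of depth described above. An ambient occlusion map works in a similar baked fashion, storing a per-texel darkening factor instead of a direction.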

The AR app

The next step was to create the Augmented Reality application. The AR software we used was Vuforia. Vuforia is an AR engine, which means it only takes care of target recognition; to display the 3D models and to build user functionality, you need a game engine. Vuforia is well supported by the Unity game engine, so Unity was a logical choice. The reason for choosing Vuforia was that in 2018 it had just introduced an exciting new feature: 3D object recognition. Older AR applications needed a 2D image or QR code to act as the trigger and target for placing the AR model. With 3D object recognition, a 3D model of the actual object acts as the trigger. This does not work out of the box: you need a 3D model of the physical target, and if you want 360-degree recognition, it has to be run through a ‘training session’. This is basically a machine-learning algorithm that analyses the object and stores a series of target images in a database. The database is imported into Unity, where you set up an AR camera and lighting, add the 3D models and materials, and program the user interaction. The latter was done by Markus Stoffer, a student assistant at the 4DRL specializing in AR/VR.
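Conceptually, the division of labour between the AR engine and the game engine comes down to a chain of transforms: the AR engine estimates the pose of the recognised target relative to the camera every frame, and the game engine draws each 3D model at a fixed offset from that target, so the augment stays attached to the print as the user walks around it. The sketch below shows that composition with 4x4 homogeneous matrices in plain Python. It is not Vuforia’s or Unity’s API; the helper names and pose values are hypothetical, and the rotation is about a single axis to keep it short.

```python
import math

def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def rot_z_translate(angle_deg, tx, ty, tz):
    """Rigid transform: rotate angle_deg about the z axis, then translate by (tx, ty, tz)."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def apply(m, p):
    """Transform a 3D point p by the 4x4 matrix m (homogeneous coordinate w = 1)."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))

# Hypothetical pose from the tracker: skull print 0.4 m in front of the camera, turned 90 degrees.
camera_from_target = rot_z_translate(90, 0.0, 0.0, 0.4)

# Hypothetical placement of one reconstruction model relative to the print (here: same spot).
target_from_model = rot_z_translate(0, 0.0, 0.0, 0.0)

# Composing the two gives where the model must be drawn in camera space this frame.
camera_from_model = matmul(camera_from_target, target_from_model)

# A point 10 cm along the model's x axis ends up roughly at (0, 0.1, 0.4) in camera space.
print(apply(camera_from_model, (0.1, 0.0, 0.0)))
```

Because only `camera_from_target` changes from frame to frame, any error in the tracker’s pose estimate shows up directly as jitter or drift in every augment, which is why recognition quality weighs so heavily on the user experience.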

The target that we used is a 3D model of the first step in the facial reconstruction, the bone fragments reconstructed to a complete skull. The target was 3D printed in on the Ultimaker 2+, and painted in skull-colours afterwards.

The 3D models used in the AR application.

Improvements and the future

In the current app, a user simply skips between the steps that show the process of facial reconstruction. Because the original focus was on learning how to work with Vuforia and Unity, and on creating a nice AR example that we could easily showcase in the lab, we did not add an informative layer. For use as an educational tool in a museum, that would be a useful next step. Adding text, or better still audio, with background information about the steps taken by the sculptor requires relatively little effort. The curator at Huis van Hilde, the museum that houses the archaeological finds and the reconstruction of the Russian soldier, has shown interest in exploring options for actually implementing it in the exhibitions.

An interesting question is whether an AR app meets the needs of a museum audience. In Loeka Meerts’s survey, museum visitors were asked about their preferred medium for learning more about the reconstruction. Only 20% of the respondents chose ‘an app on their phone’, while the largest share (41.4%) chose a digital screen next to the facial reconstructions. Visitors’ greater familiarity with digital screens in a museum setting, compared with AR apps, likely influenced this outcome. Whatever the causes, getting visitors to use innovative new technology requires a seamless user experience.

In that respect, one element that certainly needs to improve is target recognition. For instance, we have been struggling to get the smaller keychain model target (see video above) to work. Size appears to affect how well a target is recognised, but why and how remains unclear. Vuforia’s algorithms are closed-source, so it is hard to see what exactly is causing the problems. Our other experiments with AR in a museum also showed that target recognition and visual stability were unpredictable and varied from object to object. Yet both are crucial to the user experience. In that sense, AR technology still has some way to go before 3D object recognition works without problems on our mobile devices.