The Bonnefanten Museum in Maastricht houses the largest ensemble of Hortisculptures by the Dutch artist Ferdi (1927-1969). These colourful and eclectic sculptures have unfortunately experienced damage over time and are undergoing restoration by independent conservators, both at the Bonnefanten and at a studio in Amsterdam.

Aims

One such sculpture, the Shigiory Torinata, is a tall flower made from silk and artificial fur. It has tentacle-like petals and a long stem, and rests in a wicker basket with leaves protruding from it. The stem shows the majority of the damage, with tears in the fabric and along the seamline, as well as discolouration due to sun damage. In order to conserve this piece, the tears have to be sewn and the discolouration restored.

For these reasons, the Shigiory Torinata has been chosen to be restored with the help of 3D methods at the 4D Research Lab. Due to the stem's altered material, the pattern first needs to be documented in 3D, then virtually reconstructed, and finally printed onto new fabric that can be sewn back onto the sculpture without removing or modifying the original fabric.

Documentation

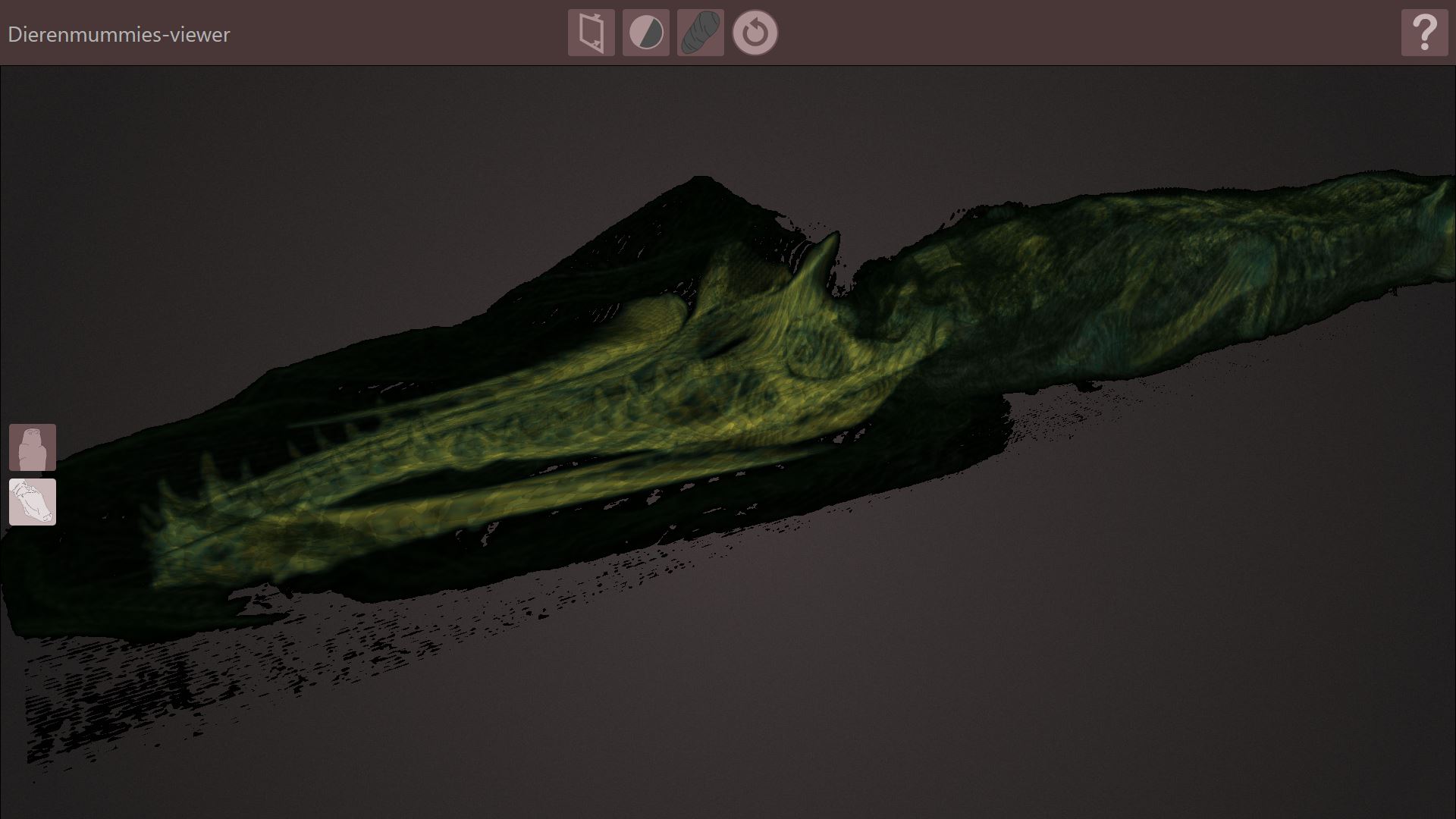

In order to document the stem's intricate pattern, the Artec3D Leo was used to scan both the geometry and the texture. This handheld structured-light scanner captures high-resolution results quickly.

Fortunately, the colour patterns displayed on the stem coincide with the patterns on the petals, which have experienced less light damage. These preserved colours allow us to replicate the pattern from the stem. After 3D scanning, colour measurements were taken of these petals using the Nikon D5300 camera, ring flash AR400, and a double polarised lens.

Digital Restoration

The next step after documenting the stem is to UV unwrap the pattern in Blender. However, since the fabric on the sculpture has wrinkled and bunched up in areas over time, it does not virtually unwrap flat. This causes distortion in the pattern, which has to be taken into account while reconstructing it digitally.

A 3D cylinder was created and aligned with the original stem, and seam lines were assigned to it. When the texture is projected onto this reconstructed cylinder, it can be unwrapped along those lines. This results in a flattened rectangular projection of the fabric.
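The geometry behind this kind of unwrap can be sketched in a few lines of Python. This is a minimal illustration only, not the Blender workflow used in the project: it assumes an idealised cylinder around the z-axis, and the function name `unwrap_cylinder`, the `seam_angle` parameter and the stem dimensions are all hypothetical.

```python
import math

def unwrap_cylinder(points, radius, seam_angle=0.0):
    """Map points (x, y, z) on a cylinder around the z-axis to flat (u, v).

    u is the arc length measured from the seam, v is the height along the axis.
    """
    flat = []
    for x, y, z in points:
        # Angle around the axis, shifted so that the seam sits at u = 0.
        theta = (math.atan2(y, x) - seam_angle) % (2 * math.pi)
        flat.append((radius * theta, z))
    return flat

# Hypothetical stem: radius 0.05 m, four points spaced around the circumference.
radius = 0.05
points = [
    (radius, 0.0, 0.0),
    (0.0, radius, 0.1),
    (-radius, 0.0, 0.2),
    (0.0, -radius, 0.3),
]
flat = unwrap_cylinder(points, radius)

# Width of the flattened rectangle equals the circumference of the cylinder.
width = 2 * math.pi * radius
```

Cutting along the seam turns the angle around the stem into a horizontal coordinate, so the flattened rectangle is as wide as the cylinder's circumference and as tall as the stem.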

The projection was transferred into Inkscape, an open-source graphics editor. There the pattern was traced using curved line tools. Images of the tentacles with preserved colours were transferred into this workspace, and the eyedropper tool was used to identify the correct colour for each part of the pattern. Colour swatches were created, and these served as the basis for reconstructing the colours of the stem. Twelve colours were identified in total; their accuracy will be verified by the conservators working with these Hortisculptures.
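As a rough illustration of what picking a swatch from a reference photo involves, the sketch below averages a small patch of pixels and writes the result as a hex colour. It is not the Inkscape procedure itself: the function names `sample_swatch` and `to_hex`, the patch size and the pixel values are all assumptions made up for the example.

```python
def sample_swatch(pixels, row, col, size=3):
    """Average the RGB values in a size x size patch centred on (row, col)."""
    half = size // 2
    patch = [
        pixels[r][c]
        for r in range(max(0, row - half), min(len(pixels), row + half + 1))
        for c in range(max(0, col - half), min(len(pixels[0]), col + half + 1))
    ]
    n = len(patch)
    # Average each channel separately and round to the nearest integer.
    return tuple(round(sum(p[i] for p in patch) / n) for i in range(3))

def to_hex(rgb):
    """Format an (R, G, B) tuple as a hex colour string."""
    return "#{:02x}{:02x}{:02x}".format(*rgb)

# Hypothetical 3 x 3 crop of a petal photo, as rows of (R, G, B) pixels.
image = [
    [(200, 30, 40), (202, 32, 38), (198, 28, 42)],
    [(201, 31, 39), (200, 30, 40), (199, 29, 41)],
    [(200, 30, 40), (200, 30, 40), (200, 30, 40)],
]
swatch = sample_swatch(image, 1, 1)
swatch_hex = to_hex(swatch)
```

Averaging over a patch rather than reading a single pixel reduces the influence of sensor noise and of the weave of the fabric on the sampled colour.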

Results

Once the pattern had been reconstructed, we brought it back into Blender and reapplied it to the cylindrical stem by baking the pattern onto the original fabric and applying a silk texture map. In this way, we can visualise how the stem will look with its vibrant colours restored once the fabric is printed.

Credits:

Bonnefanten Museum

- Charlotte Franzen, Head of Collections

- Paula van den Bosch, Senior curator contemporary art

4D Research Lab

- Tijm Lanjouw, Senior 3D Modeller

- Alicia Walsh, Junior 3D Modeller

Conservation and Restoration

- Ellen Jansen, University of Amsterdam, Independent conservator

- Kaltja van de Braak, Independent conservator

Shinkichi Tajiri Estate