Hugo Huurdeman, Jill Hilditch, Jitte Waagen

Why evaluation matters

From the outset, the 3DWorkSpace project has focused on evaluating the platform. Why? Because the platform is a multi-user environment that should ultimately benefit education and research by offering tools for learning about 3D datasets, it is of paramount importance to understand how well it actually performs in this respect. We need this information to check usability, fine-tune functionality, discuss the platform's potential for implementation in education and research and, of course, eventually to assess our efforts and investments and determine future directions.

From the start, we set out to answer various questions, such as:

- To what extent does the platform support easy interaction with 3D datasets?

- To what extent does it encourage more in-depth engagement with 3D datasets?

- To what extent is 3DWorkSpace a viable platform for education or research?

- What are regarded as the most useful features of the platform?

- What potentially useful features are not yet incorporated?

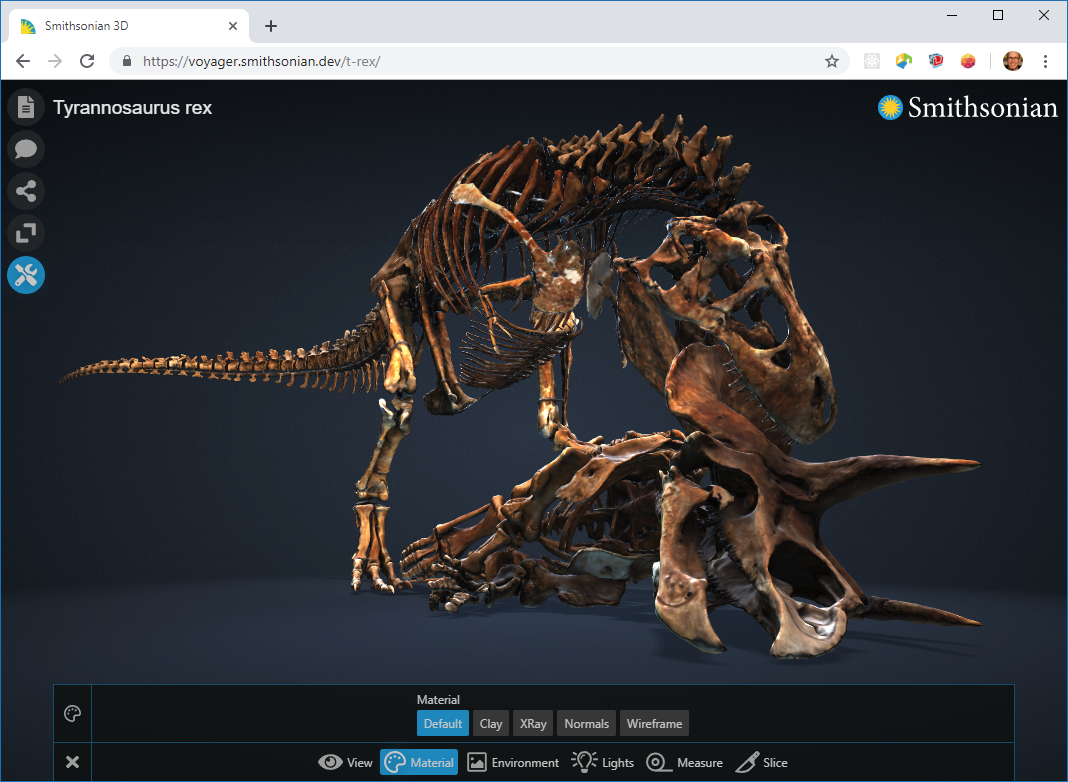

To this end, we designed an evaluation strategy that includes a full range of potential users, from researchers to teachers to students, as well as a selected group of experts in the domain of ICT and education, digital archaeology and also the 3D Program team of the Smithsonian itself – the creators of the Voyager 3D toolset integrated into 3DWorkSpace.

Evaluation design: a plan in two parts

In order to collect useful evaluation data, we designed both quantitative and qualitative evaluations.

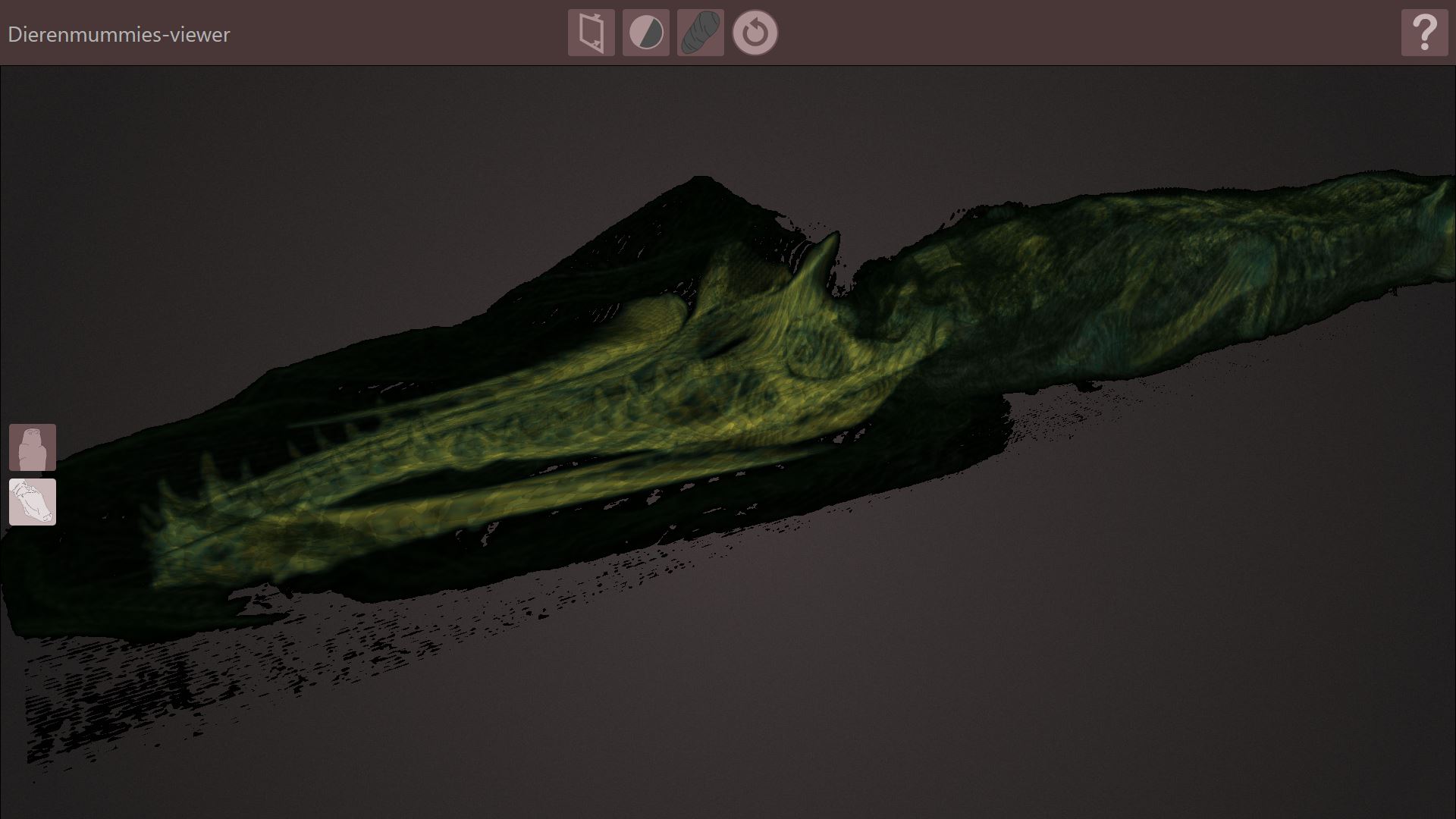

The first part was an expert evaluation, planned rather early in the development process: after a first concept of the 3DWS platform was online, but before the final phase of development, bug-fixing and fine-tuning. We invited a combination of hand-picked and suggested reviewers, selected for their expertise within and outside archaeology and for their different specializations. We asked the reviewers to follow a structured online survey (using LimeSurvey) that included a statement of the project aims and a set of dedicated screencasts walking them through the 3DWS platform as it stood at that moment. After a round of demographic questions, the participants engaged with the platform themselves. Subsequently, they filled out additional questionnaires about their experience of using 3DWorkSpace. These comprised the System Usability Scale (SUS), a validated usability survey (Brooke, 1996), as well as a set of more qualitatively oriented questions.

Six experts participated in the usability survey, which means that we met the generally accepted minimum number of participants needed to generate a quantitative SUS score (see e.g., Virzi, 1992). The subsequent qualitative questions were aimed at gathering expert opinions on whether the 3DWS platform was fit for purpose, as well as feedback on implementation possibilities and detailed information on potential shortcomings. In this way, we hoped to gather useful information from a group with a broad perspective and good insight into 3D, digital methods and heritage.

The second part of the evaluation was a focus group with students, i.e., one of the intended end-user audiences. This was organized in a later phase of the project using a more refined version of the 3DWS platform, which contained elaborate examples of learning pathways (specific sequential guided activities aimed at achieving competence) using the 3D model collections within the platform. Participants were students who responded to an advertisement for participation. As the results from the evaluation's introductory questionnaire indicate, these students had varied experience with 3D datasets in the course of their studies. During the focus group session, the students were introduced to the project and the platform, watched screencasts of 3DWorkSpace, and were then presented with a case study on forming traces in pottery production in antiquity. Finally, they completed usability questionnaires similar to those given to the experts. More importantly, we held a plenary discussion on the 3DWS platform to get their perspective. The nature and role of the learning pathways presented to the students are the focus of Blogpost 3 for the 3DWS project.

Results of the expert assessment

The expert participants in our survey formed a diverse group in terms of their fields of study (Earth Sciences, Archaeology, Heritage Management, Computer Science, Information Science and Engineering). Most had obtained postgraduate qualifications in their chosen fields and were ultimately working on applications in computer science/visualisation or in archaeological research. After trying out the 3DWorkSpace toolset, the participants first filled out the SUS, which consists of a set of 10 questions (for instance: "I think I would like to use this system frequently", and "I found the system unnecessarily complex"). The final SUS score is calculated from the answers, which are given on a scale of 1 to 5. This score ranges from 0 to 100, where a minimum score of 52 is considered "OK" and a score above 70 is considered "Good" (see e.g. the empirical evaluation by Bangor et al., 2008).
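To make the calculation concrete, here is a minimal sketch of the standard SUS scoring procedure described by Brooke (1996): odd-numbered (positively worded) items contribute their answer minus 1, even-numbered (negatively worded) items contribute 5 minus their answer, and the sum is multiplied by 2.5. This post does not describe the exact tooling used for the 3DWorkSpace evaluation, so the function names and the example answers below are purely illustrative assumptions.

```python
from statistics import mean


def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` holds the ten answers in questionnaire order,
    each an integer from 1 to 5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 answers")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each answer must be an integer from 1 to 5")

    total = 0
    for item, answer in enumerate(responses, start=1):
        if item % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += answer - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - answer
    # Each item contributes 0-4, so the sum is 0-40; scale to 0-100.
    return total * 2.5


def mean_sus(all_responses):
    """Average the per-participant SUS scores of a group."""
    return mean(sus_score(r) for r in all_responses)


# Hypothetical answers, NOT data from the 3DWorkSpace evaluation.
neutral = [3] * 10        # "neither agree nor disagree" throughout
best_case = [5, 1] * 5    # full agreement on odd items, none on even items
print(sus_score(neutral))               # 50.0
print(sus_score(best_case))             # 100.0
print(mean_sus([neutral, best_case]))   # 75.0
```

The group score is the mean of the individual participants' scores, which is why a result for a small group need not be a multiple of 2.5.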

The System Usability Scale score for the 3DWorkSpace platform was 74.64, based on the questionnaires completed by our group of six experts. Thus, the usability of the platform may be considered good, even though individual questionnaire items indicated further potential for improvement.

This was further explored in the next part of the questionnaire, with specific open questions about the usability of 3DWorkSpace. Participants indicated that their overall experience with 3DWorkSpace was "positive", that the system was "easy to navigate" and that it "made the artifacts and models tangible". The learning pathways, the annotation features and the possibilities for making collections were generally seen as the most useful features of 3DWorkSpace. The 'live' 3D model views were also seen as a useful addition, although several participants reported that these slowed down their browser, because showing multiple 3D viewer panels simultaneously demands considerable hardware resources. Some concerns were raised about the workflows (for instance when adding learning pathways) and about the ability to change other people's collections in the current prototype. A number of concrete suggestions for improving features were provided, for instance adding more visual elements to the now largely textual learning pathways. Additionally, participants gave feedback on the user interface and user experience, pointing at the naming of functionality, the contents of menus and the organization of features. These suggestions provide a wealth of useful input for future improvements of 3DWorkSpace.

The next part of the survey looked at the purposes of the 3DWorkSpace project and the ability of the created tools to meet the project's goals: develop an online platform for interacting with 3D datasets and explore its potential to offer structured guidance, stimulate discussion and advance knowledge publication. Generally, the tool was deemed by the experts as appropriate for reaching the project's goals. One participant mentioned that it "certainly makes it easier to engage with 3D datasets through the viewer and the rich annotation and documentation system". Another referred to the possibility of allowing "multiple people to create their own annotations and interpretations of the same datasets" as a crucial element. This was underlined by another participant: the tool facilitates "co-creation of and transfer of knowledge", in both didactic and science dissemination contexts.

Specific observations made by the experts on the placing and visibility of models, information texts, additional hyperlinked content and more, were useful for considering how to maximize engagement with the integrated datasets. One expert asked if gamification of the learning pathways (questions and scoring) might encourage wider or more in-depth engagement with the 3D models, in educational and heritage-based contexts. Another comment bridging usability and engagement potential suggested including ‘info-tips’ to briefly show the functions and capacities of the tools on offer, or prompts to remind users of the different ways they could interact with the models and collections. Further, it was emphasized that the structured guidance contained in the learning pathways needed to be tested more systematically in a pedagogical context. A first step in this regard will be described in Blogpost 3 focusing on the learning pathways in 3DWorkSpace and their evaluation.

Many comments looked ahead to scaling up the 3DWS prototype and raised concerns regarding data integrity and data visibility for different user groups. Maintaining the integrity of uploaded collections with curated annotations and navigation was a key issue when considering reuse of the models and collections, as were publication rights. Two future avenues for addressing integrity issues on the platform were suggested: developing different user profiles with greater or lesser editing powers, and restricting access to collections containing unpublished 3D models to authenticated collaborators only.

Overall, the experts commented on the broad interdisciplinary appeal of the 3DWS platform for any field in which sharing or inspecting 3D datasets is important for moving knowledge and collaboration forward (such as medicine and geosciences, among others). The commenting feature and the ability to add notes to the 3D models were also found to open up important space for new dialogues and knowledge sharing, giving such a platform future appeal across a broad range of contexts.

Conclusion and discussion

This blogpost outlined one of the key focal points of the 3DWorkSpace project: evaluation. An expert evaluation of the platform resulted in a SUS score of 74.64, which represents “good” usability. In addition, the qualitative parts of this study showed many positive aspects, for instance the ease of navigation and the 3DWorkSpace platform’s facilitation of co-creation of knowledge. Naturally, potential points for improvement were also identified, for example regarding editing workflows and technical aspects of the platform.

In the next blogpost, we will shift our focus to the evaluation conducted with students and discuss the nature and role of the learning pathways introduced in 3DWorkSpace.

References

Bangor, A., Kortum, P. T., & Miller, J. T. (2008). An empirical evaluation of the System Usability Scale. International Journal of Human-Computer Interaction, 24(6), 574–594. https://doi.org/10.1080/10447310802205776

Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In P. W. Jordan, B. Thomas, B. A. Weerdmeester, & A. L. McClelland (Eds.), Usability Evaluation in Industry. Taylor and Francis.

Virzi, R. A. (1992). Refining the test phase of usability evaluation: How many subjects is enough? Human Factors, 34(4), 457–468. https://doi.org/10.1177/001872089203400407